When might AI truly transform our world, and what will that transformation look like? The Epoch AI research team's mission is to understand the future of AI.

Two Epoch AI researchers with relatively long and short AGI timelines, Ege and Matthew, candidly examine the roots of their disagreement. They dissect each other’s views and discuss the evidence, intuitions and assumptions that lead their timelines to diverge by factors of two or three for key transformative milestones.

The hosts discuss:

• Their median timelines for specific milestones (like sustained 5%+ GDP growth) that highlight differences between optimistic and cautious AI forecasts.

• Whether AI-driven transformation will primarily result from superhuman researchers (a "country of geniuses") or widespread automation of everyday cognitive and physical tasks.

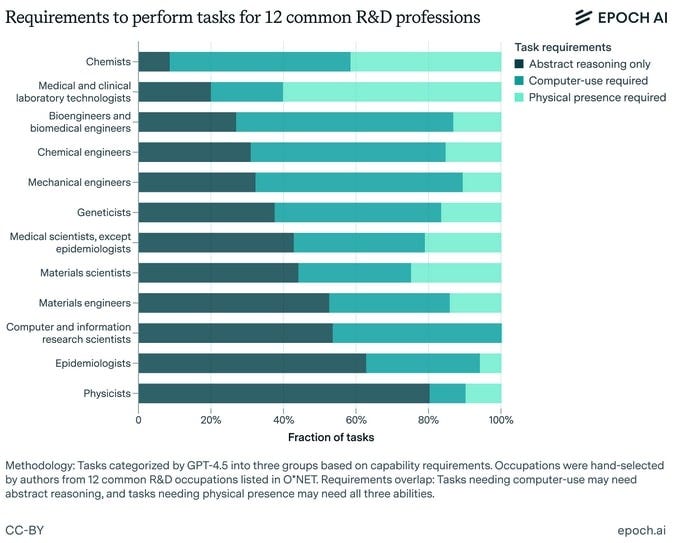

• Moravec's Paradox today: Why practical skills like agency and common sense remain challenging for AI despite advancements in reasoning, and how this affects economic impact.

• The interplay of hardware scaling, algorithmic breakthroughs, data availability (especially for agentic tasks), and the persistent challenge of transfer learning.

• Prediction pitfalls and why conventional academic AI forecasting might miss the mark.

• A world with AGI: moving from totalizing "single AGI" or "utopia vs. doom" narratives to consider economic forces, decentralized agents, and the co-evolution of AI and society.


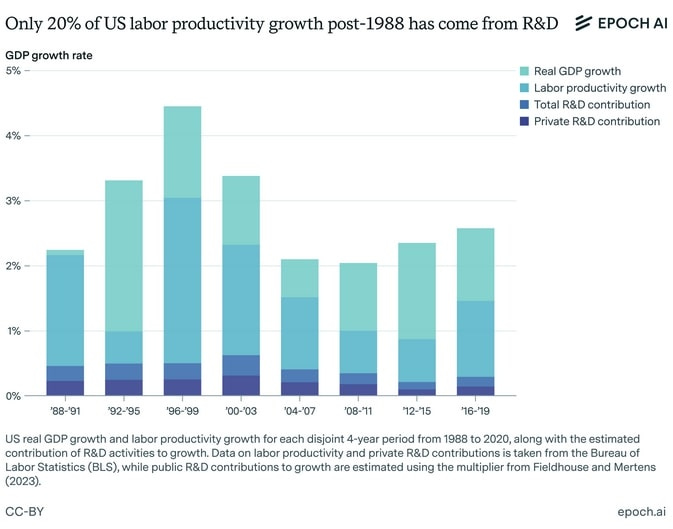

The value of AI will be driven by broad economic deployment, and while we should expect this to lead to an increase in productivity and output per person due to increasing returns to scale, most of this increase will probably not come from explicit R&D. The primary economic impact of AI will be its ability to broadly automate labor.

The real reason AI benchmarks haven’t reflected economic impacts.

People rarely set out to measure the real-world impacts of AI systems, which would require substantial resources to capture the complexities of real-world tasks. Instead, benchmarks have largely been optimized for other purposes, like comparing the relative capabilities of models on tasks that are “just within reach”. As AI systems become increasingly capable and are used in more sectors of the economy, researchers have stronger incentives to build benchmarks that capture these kinds of real-world economic impacts. Building realistic and useful benchmarks is a hard problem.

AI Progress is about to speed up.

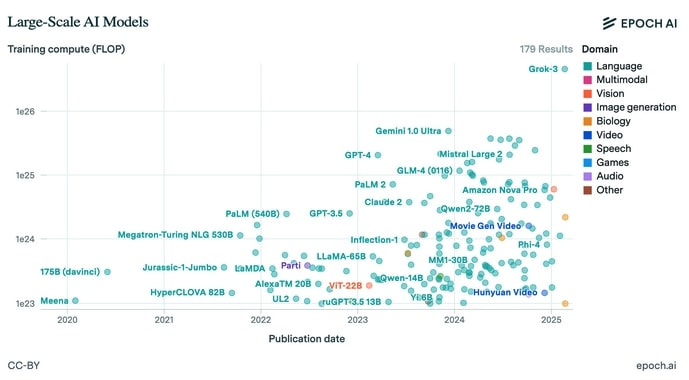

The release of GPT-4 in March 2023 stands out because GPT-4 represented a 10x compute scale-up over the models we had seen before. Since then, we’ve not seen another scale-up of this magnitude: all currently available frontier models, with the exception of Grok 3, have been trained on a compute budget similar to GPT-4 or less. For instance, Dario Amodei, CEO of Anthropic, recently confirmed that the training cost of Claude 3.5 Sonnet was in the tens of millions of dollars, and based on trends in GPU price-performance this means it was likely trained using around twice the compute that GPT-4 used.

Starting with Grok 3, the next generation of models released by frontier labs this year is going to break this barrier and represent more than an order of magnitude scale-up over GPT-4, and perhaps two orders of magnitude when it comes to reasoning RL. Based on past experience with scaling, we should expect this to lead to a significant jump in performance, at least as big as the jump from GPT-3.5 to GPT-4.

What to expect from the new models

On the macro scale, Epoch AI expects the incoming generation of models to be trained on around 100K H100s, possibly with 8-bit quantization, with a typical training compute of around 3e26 FLOP.

NOTE: xAI is expanding its data center to 400K GPUs (200K B200s and 200K H100s/H200s), or about 12x the compute of 100K H100s. That would be about 3.6e27 FLOP. This will be ready in 2-3 months. By the end of 2025 or Q1 2026, xAI will have about 1M GPUs, and those should be B200/B300/Dojo 2 (1.5e28 FLOP).
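The FLOP figures in the note above follow from scaling the 3e26 FLOP baseline by a relative compute multiplier. A minimal sketch of that arithmetic is below; the function names are hypothetical, and it assumes training compute scales linearly with cluster compute for a comparable training run, which the post does not state explicitly.

```python
# Baseline from the text: a 100K-H100 training run at about 3e26 FLOP.
BASELINE_FLOP = 3e26


def scaled_flop(multiplier: float) -> float:
    """Training compute if the cluster has `multiplier` times the baseline compute.

    Assumption (not from the source): compute scales linearly with the multiplier.
    """
    return BASELINE_FLOP * multiplier


def implied_multiplier(flop: float) -> float:
    """Compute multiplier over the baseline implied by a quoted FLOP figure."""
    return flop / BASELINE_FLOP


if __name__ == "__main__":
    # 400K GPUs, quoted as about 12x the 100K-H100 cluster.
    print(f"12x baseline: {scaled_flop(12):.1e} FLOP")
    # Multiplier implied by the 1.5e28 FLOP figure quoted for the 1M-GPU cluster.
    print(f"1.5e28 FLOP implies about {implied_multiplier(1.5e28):.0f}x baseline")
```

Read this way, the 1.5e28 FLOP figure for the 1M-GPU cluster corresponds to roughly 50 times the 100K-H100 baseline.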

The 100K-H100 models will initially be perhaps an order of magnitude bigger than GPT-4o in total parameter count. We’ll therefore probably see a 2-3x increase in API token prices and around a 2x slowdown in short-context decoding speed when the models are first released, though both should improve later in the year as inference clusters switch to newer hardware and algorithmic progress continues.

The industry as a whole will continue growing, perhaps at a slightly slower pace than before. Epoch AI’s median forecast for OpenAI’s 2025 revenue is around $12B (in line with OpenAI’s own forecasts), with around $4.5B of that coming in the last quarter of the year. Revenue growth overall is expected to be driven primarily by improvements in agency and in maintaining coherence over long contexts, which we’re already seeing some signs of with products like Deep Research and Operator. These appear to be more important than gains in complex reasoning, though they are less well measured by the concrete benchmarks available today.

If we take the high end of the algorithmic progress rate estimates and assume we’ve had an efficiency doubling every 5 months since GPT-4 came out, then a 10x compute scale-up corresponds roughly to 17 months of algorithmic progress in its impact on model quality. We’ll also have another 10 months of algorithmic progress until the end of 2025, which means the total progress we’ll see until the end of 2025 will be worth more than 2 years of algorithmic progress, i.e. more than the progress we’ve seen since GPT-4 when compute expenditures have been fairly flat.
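The conversion in the paragraph above can be checked with a short calculation. The sketch below assumes, as the paragraph does, that each efficiency doubling is worth the same as a 2x increase in compute, so a compute scale-up of factor k is equivalent to log2(k) doublings. The function name is hypothetical.

```python
import math


def months_of_algorithmic_progress(compute_multiplier: float,
                                   doubling_time_months: float) -> float:
    """Months of algorithmic progress equivalent to a given compute scale-up.

    Assumes one efficiency doubling is worth the same as doubling compute.
    """
    doublings = math.log2(compute_multiplier)
    return doublings * doubling_time_months


if __name__ == "__main__":
    # Figures from the text: 10x scale-up, one doubling every 5 months,
    # plus 10 further months of algorithmic progress until the end of 2025.
    from_scale_up = months_of_algorithmic_progress(10, 5)
    total = from_scale_up + 10
    print(f"Scale-up equivalent: {from_scale_up:.1f} months")
    print(f"Total by end of 2025: {total:.1f} months ({total / 12:.1f} years)")
```

With these inputs, the scale-up is worth about 16.6 months, which the text rounds to 17, and the total comes to a little over 26 months, consistent with "more than 2 years".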
