There were major developments this week in AI, AI startups, AI research and AI funding.

Google Pixel 9 adds on-device AI processing, a new AI chip and AI features

OpenAI lets companies customize GPT-4o

AI Research Improves Reasoning, Agents and Optimization

Major recent AI funding announcements

Google's Pixel 9 AI Enhancements: Google unveiled its Pixel 9 smartphones with a significant focus on AI capabilities.

Google Tensor G4: This processor is designed for efficiency and runs Gemini Nano with Multimodality, enabling the phone to understand and process text, images, sound, and spoken language more effectively.

Google AI and Gemini Integration: The Pixel 9 series ships with what Google calls Google AI, which integrates Gemini, Google's AI model, into a range of features, including:

Add Me, a camera feature that lets users insert themselves into group photos seamlessly after the fact.

Pixel Screenshots, which uses AI to understand and organize screenshot content so that users can ask questions about the information in them.

Call and Communication Features:

Call Notes: Provides summaries and transcripts of phone calls and attempts to extract key information from them, although with noted inaccuracies in some instances.

General AI Assistance:

Gemini Nano with Multimodality: This enables on-device AI processing, significantly improving the speed and capability of AI functions such as generating text and understanding complex queries, with output of up to 45 tokens per second.

OpenAI's Customization for Businesses: OpenAI has started allowing businesses to customize its most powerful AI models, indicating a move towards more tailored AI solutions for enterprise needs.

Customization of GPT-4o: OpenAI now allows corporate customers to fine-tune GPT-4o on their own company data. Fine-tuning tailors the model to a business's specific needs and improves its performance on that proprietary data.

AI-as-a-Service Feature: The capability is being described as AI-as-a-Service: businesses can get more out of their AI investments by customizing the model directly, whether for customer-service efficiency or other business-specific applications.
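For readers wondering what fine-tuning on company data involves in practice: OpenAI's fine-tuning for chat models takes training examples as a JSONL file, one conversation per line, each stored as a `messages` list of role/content pairs. The minimal sketch below, assuming that chat format, turns hypothetical support Q&A pairs into such a file using only the standard library; `SUPPORT_PAIRS`, `ExampleCo` and the helper names are made up, and no OpenAI API is called. OpenAI's fine-tuning documentation is the authority on current data requirements and supported GPT-4o snapshots.

```python
import json
from pathlib import Path
from typing import List, Tuple

# Hypothetical company data: (customer question, approved support answer) pairs.
SUPPORT_PAIRS: List[Tuple[str, str]] = [
    ("How do I reset my password?",
     "Go to Settings > Account > Reset password and follow the emailed link."),
    ("What are your support hours?",
     "Our support team is available 9am to 6pm, Monday to Friday."),
]

# Hypothetical system prompt for the fictional company ExampleCo.
SYSTEM_PROMPT = "You are a concise customer-support assistant for ExampleCo."


def to_chat_example(question: str, answer: str) -> dict:
    """Wrap one Q&A pair in the chat-message structure used for fine-tuning."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def write_jsonl(pairs: List[Tuple[str, str]], path: Path) -> int:
    """Write one JSON object per line (JSONL) and return the number of examples written."""
    with path.open("w", encoding="utf-8") as f:
        for question, answer in pairs:
            f.write(json.dumps(to_chat_example(question, answer)) + "\n")
    return len(pairs)
```

Calling `write_jsonl(SUPPORT_PAIRS, Path("train.jsonl"))` writes the file; with the official `openai` Python SDK it could then be uploaded via `client.files.create(file=..., purpose="fine-tune")` and passed as `training_file` to `client.fine_tuning.jobs.create(...)`. A real job needs far more examples than this two-row toy set.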

AI Research Improves Reasoning, Agents and Optimization

Mutual Reasoning Makes Smaller LLMs Stronger Problem Solvers

This paper introduces rStar, a self-play mutual reasoning approach that significantly improves the reasoning capabilities of small language models (SLMs) without fine-tuning or relying on superior models. rStar decouples reasoning into a self-play mutual generation-discrimination process. First, a target SLM augments Monte Carlo Tree Search (MCTS) with a rich set of human-like reasoning actions to construct higher-quality reasoning trajectories. Next, another SLM with capabilities similar to the target SLM acts as a discriminator to verify each trajectory the target SLM generates. Trajectories on which both SLMs agree are considered mutually consistent and are therefore more likely to be correct. Extensive experiments across five SLMs show that rStar can effectively solve diverse reasoning problems, including GSM8K, GSM-Hard, MATH, SVAMP, and StrategyQA. Remarkably, rStar boosts GSM8K accuracy from 12.51% to 63.91% for LLaMA2-7B, from 36.46% to 81.88% for Mistral-7B, and from 74.53% to 91.13% for LLaMA3-8B-Instruct.
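The mutual-consistency check at the heart of rStar can be illustrated with a minimal sketch. It assumes the MCTS generation step has already produced candidate trajectories and replaces both SLMs with hypothetical toy stand-ins (`TRAJECTORIES`, `toy_discriminator`): the discriminator sees a random prefix of each trajectory and must reach the same answer on its own. The final majority vote over agreed answers is a simplification chosen for this sketch, not rStar's own selection rule.

```python
import random
from collections import Counter
from typing import Callable, List, Optional, Tuple

# A trajectory is (reasoning steps, final answer) produced by the target SLM.
Trajectory = Tuple[List[str], str]
# Discriminator SLM stand-in: given partial steps, it returns its own final answer.
Completer = Callable[[List[str]], str]


def is_mutually_consistent(traj: Trajectory, complete: Completer, rng: random.Random) -> bool:
    """Show the discriminator a random prefix of the steps and check whether
    its own completion reaches the same final answer."""
    steps, answer = traj
    # Reveal between 1 and len(steps) - 1 steps, so the last step stays hidden
    # whenever the trajectory has more than one step.
    k = rng.randint(1, max(1, len(steps) - 1))
    return complete(steps[:k]) == answer


def select_answer(trajs: List[Trajectory], complete: Completer, seed: int = 0) -> Optional[str]:
    """Keep only mutually consistent trajectories, then majority-vote their answers."""
    rng = random.Random(seed)
    agreed = [t[1] for t in trajs if is_mutually_consistent(t, complete, rng)]
    return Counter(agreed).most_common(1)[0][0] if agreed else None


# Hypothetical toy problem: "Add 3 and 4, then double the result."
TRAJECTORIES: List[Trajectory] = [
    (["3 + 4 = 7", "7 * 2 = 14"], "14"),
    (["3 + 4 = 7", "7 * 2 = 15"], "15"),    # arithmetic slip in the last step
    (["3 + 4 = 7", "2 * 7 = 14"], "14"),
    (["3 * 4 = 12", "12 * 2 = 24"], "24"),  # wrong first step
]


def toy_discriminator(prefix: List[str]) -> str:
    """Stand-in for the second SLM: takes the value stated in the first revealed
    step and finishes the task (doubling) on its own."""
    value = int(prefix[0].split("=")[1])
    return str(value * 2)


if __name__ == "__main__":
    print(select_answer(TRAJECTORIES, toy_discriminator))  # prints 14
```

The slipped trajectory (answer 15) is rejected, but the one with a wrong first step (answer 24) survives because the discriminator continued from the flawed prefix. Agreement raises, rather than guarantees, the odds of a correct answer.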

Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters

The paper asks how much an LLM's performance can be improved given a fixed amount of inference-time compute. It finds that the effectiveness of different test-time scaling approaches varies with the difficulty of the prompt, then proposes an adaptive "compute-optimal" strategy that improves efficiency by more than 4x compared with a best-of-N baseline. In a FLOPs-matched evaluation, optimally scaling test-time compute can outperform a 14x larger model.

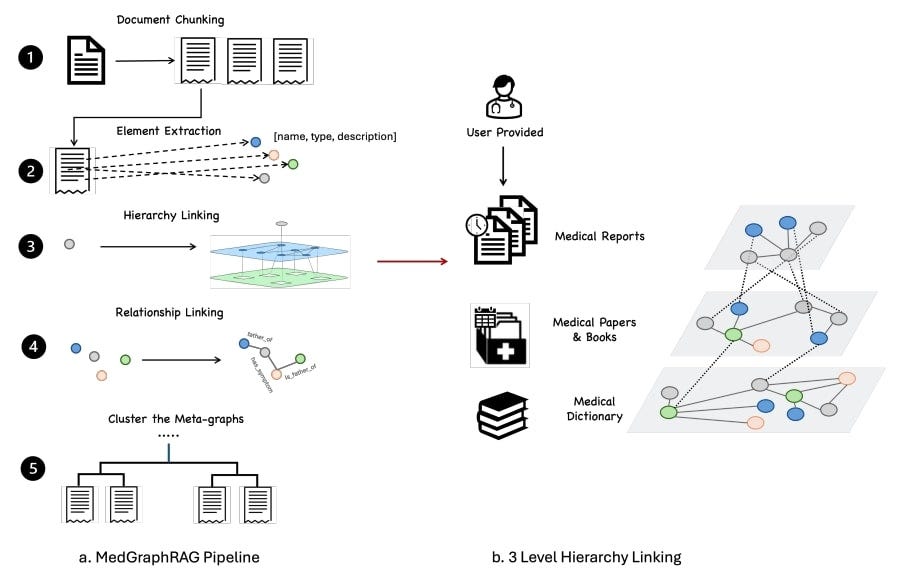
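To make the test-time compute comparison concrete, here is a minimal sketch of the two ideas. `best_of_n` is the baseline: sample N answers and keep the one a verifier scores highest. `compute_optimal_policy` captures the adaptive idea in its simplest form: for each difficulty bin, use whichever test-time strategy did best on held-out prompts at the same budget. The strategy names, accuracy numbers and toy verifier are hypothetical, and the paper's actual search methods and difficulty estimation are not reproduced here.

```python
from typing import Callable, Dict


def best_of_n(sample: Callable[[], str], score: Callable[[str], float], n: int) -> str:
    """Best-of-N baseline: draw n independent candidates and keep the one the
    verifier scores highest."""
    candidates = [sample() for _ in range(n)]
    return max(candidates, key=score)


def compute_optimal_policy(heldout_acc: Dict[str, Dict[str, float]]) -> Dict[str, str]:
    """For each difficulty bin, choose the test-time strategy with the best
    held-out accuracy at the same compute budget."""
    return {bin_: max(accs, key=accs.get) for bin_, accs in heldout_acc.items()}


def toy_verifier(answer: str) -> float:
    """Hypothetical verifier: higher score for answers closer to 42."""
    return -abs(int(answer) - 42)


# Hypothetical held-out accuracies, each measured at a budget of 16 generations.
HELDOUT_ACC: Dict[str, Dict[str, float]] = {
    "easy": {"best_of_16": 0.80, "revisions_16": 0.86},
    "hard": {"best_of_16": 0.31, "revisions_16": 0.24},
}

if __name__ == "__main__":
    answers = iter(["41", "42", "40"])  # stand-in for sampling from an LLM
    print(best_of_n(lambda: next(answers), toy_verifier, n=3))  # prints 42
    print(compute_optimal_policy(HELDOUT_ACC))  # prints {'easy': 'revisions_16', 'hard': 'best_of_16'}
```

With these made-up numbers the policy picks revisions for easy prompts and best-of-16 for hard ones; the point is only that the choice is made per difficulty bin rather than once for all prompts.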

MedGraphRAG is a graph-based retrieval-augmented generation (RAG) framework for the medical domain, focused on enhancing LLMs and generating evidence-based results. It uses a hybrid static-semantic approach to chunk documents, improving context capture, and represents entities and medical knowledge as graphs that are linked into an interconnected global graph. This approach improves precision and outperforms state-of-the-art models on multiple medical Q&A benchmarks.
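The general graph-retrieval idea behind approaches like this can be sketched in a few lines. This is not MedGraphRAG's pipeline (its hybrid static-semantic chunking and graph construction are more involved): it assumes pre-chunked text, a hypothetical keyword-based entity matcher standing in for medical entity extraction, and an illustrative co-occurrence graph. Retrieval returns chunks that mention the query's entities or their neighbors in the graph.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical entity vocabulary standing in for a real medical entity-extraction step.
ENTITIES = {"metformin", "type 2 diabetes", "lactic acidosis", "kidney disease"}

# Illustrative pre-chunked documents.
CHUNKS = [
    "Metformin is a first-line therapy for type 2 diabetes.",
    "Metformin use is associated with rare lactic acidosis.",
    "Lactic acidosis risk rises in patients with kidney disease.",
    "Influenza vaccination is recommended annually.",
]


def extract_entities(text: str) -> Set[str]:
    """Naive case-insensitive substring match against the vocabulary."""
    lower = text.lower()
    return {e for e in ENTITIES if e in lower}


def build_graph(chunks: List[str]) -> Tuple[Dict[str, Set[str]], Dict[str, Set[int]]]:
    """Connect entities that co-occur in a chunk and record which chunks mention each entity."""
    edges: Dict[str, Set[str]] = defaultdict(set)
    mentions: Dict[str, Set[int]] = defaultdict(set)
    for i, chunk in enumerate(chunks):
        ents = extract_entities(chunk)
        for e in ents:
            mentions[e].add(i)
            edges[e] |= ents - {e}
    return edges, mentions


def retrieve(query: str, chunks: List[str], edges: Dict[str, Set[str]],
             mentions: Dict[str, Set[int]], hops: int = 1) -> List[str]:
    """Return chunks that mention a query entity or a graph neighbor within `hops` hops."""
    frontier = extract_entities(query)
    seen = set(frontier)
    for _ in range(hops):
        # Expand one hop outward, skipping entities already visited.
        frontier = {n for e in frontier for n in edges.get(e, set())} - seen
        seen |= frontier
    ids = sorted({i for e in seen for i in mentions.get(e, set())})
    return [chunks[i] for i in ids]


if __name__ == "__main__":
    edges, mentions = build_graph(CHUNKS)
    for chunk in retrieve("Is metformin safe?", CHUNKS, edges, mentions):
        print(chunk)
```

Here a question about metformin also pulls in the kidney-disease chunk through the lactic-acidosis link, which matching the query's keywords alone would miss.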


Subscribe to next BIG future to keep reading this post and get 7 days of free access to the full post archives.