This is the first in a weekly series focused on major developments in AI, AI startups, AI research and AI funding.

There are several major things we will look at:

Google hires Character.ai founders and top researchers

SearchGPT is not yet good enough to beat Google search

Scaling AI and Paths to Trillions and Superintelligent AI

Major AI funding

Google Hires Character.ai Founders and Researchers and Pays Out Character.ai Investors

Character.ai has entered into an agreement with Google. As part of this agreement, Character.AI will provide Google with a non-exclusive license for its current LLM technology. Character.AI will continue to grow and to focus on building personalized AI products for users around the world.

Investors in Character.AI are being paid for the value of their equity by Google at a valuation of $2.5 billion. Employees are receiving cash at that valuation matching their vested shares. They will keep getting payments at that price as their existing stock grants continue to vest. Character.AI had raised $150 million to date and its last known valuation was $1 billion. Investors like Andreessen Horowitz are getting 2.5X their investment.

Cofounders Noam Shazeer and Daniel De Freitas, along with about 30 members of the research team, will also join Google. The two left Google in 2021 to start Character.ai because they were frustrated by the search giant’s bureaucracy.

The Google-Character.ai deal is similar to OpenAI partnering closely with Microsoft.

SearchGPT is not yet good enough to beat Google search

The BG2 podcast and Altimeter Capital research indicate that the current generation of SearchGPT is not good enough to displace Google Search. This could change with new voice capabilities and with memory added to generative AI systems. The next phase of foundation model training needs another $10 billion of infrastructure, and the phase after that will need about $30-60 billion of infrastructure.

Scaling AI and Paths to Trillions and Superintelligent AI

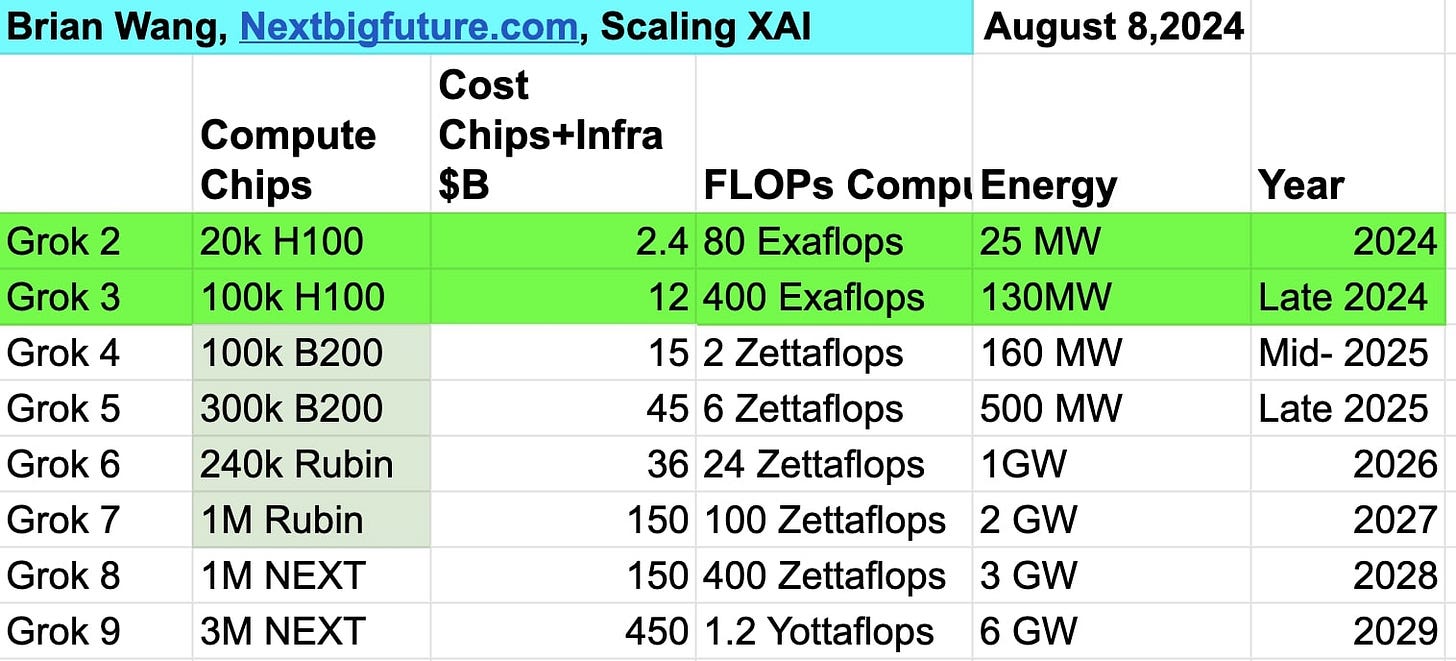

Here is an estimate of the costs to get the chips and pay for the energy and infrastructure needed to train large language models. Elon Musk has described going to 300,000 Nvidia B200 chips in mid 2025. This could cost about $45 billion. It could be possible to get two models trained as each major round of chips is built and used for AI training.
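As a rough check on those numbers, the short Python sketch below works out the all-in cost per chip implied by the two figures quoted above. It assumes the $45 billion covers chips, energy and infrastructure together, so the result is not a chip list price. The function name `implied_cost_per_chip` is made up for this illustration.

```python
def implied_cost_per_chip(total_cost_usd: float, num_chips: int) -> float:
    """Return the all-in cost per chip implied by a total build-out cost."""
    if num_chips <= 0:
        raise ValueError("num_chips must be positive")
    return total_cost_usd / num_chips


# Figures quoted in the text: about $45 billion for 300,000 B200 chips.
# Assumption: the total includes energy and infrastructure, not just chips.
TOTAL_COST_USD = 45e9
NUM_CHIPS = 300_000

per_chip = implied_cost_per_chip(TOTAL_COST_USD, NUM_CHIPS)
print(f"Implied all-in cost per chip: ${per_chip:,.0f}")
```

With these inputs the implied figure is $150,000 per chip, all-in. Changing either input shows how sensitive the total is to the chip count or to the per-chip infrastructure burden.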

Continued scaling of chip performance, chip counts and data center energy could enable more than 6 further cycles of LLM training. The resulting advances in AI capabilities could produce AI that surpasses the utility of the Google search engine and systems that largely replace programmers or enhance programmer productivity by hundreds of times. Those AI capabilities would be worth trillions.

We know from Google DeepMind's AlphaProof and AlphaGeometry that advanced math and reasoning are possible for LLM systems, and certainly for LLM systems combined with AlphaZero-type AI.
