Quantinuum Trapped-Ion Quantum Computer Sets Compute World Record, 100 Times Faster Than Google Sycamore

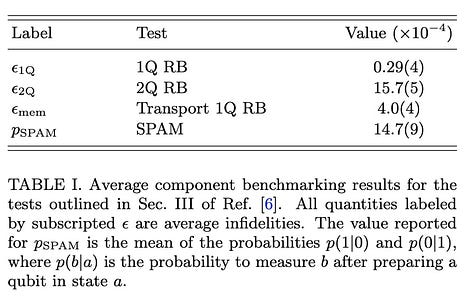

Quantum computing researchers are exploring whether quantum computational advantage might be obtainable without quantum error correction. The larger one can make a quantum computer while continuing to push down error rates, the more likely it is that we will find tasks at which non-error-corrected quantum computers dramatically outperform the best classical algorithms. The high gate fidelities and arbitrary connectivity afforded by the trapped-ion quantum charge-coupled device (QCCD) architecture have enabled random circuit sampling (RCS) to be carried out in a computationally challenging regime and at unprecedented fidelities, leaving considerable room to scale such demonstrations up even without further reductions in gate error rates.

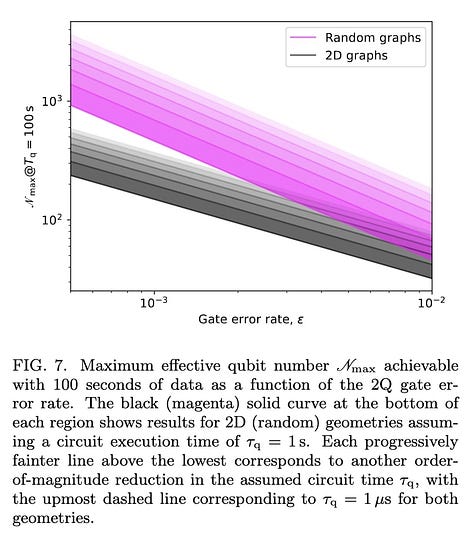

Quantinuum is confident that H2 is nowhere near the limit of how far such demonstrations can be pushed using the QCCD architecture. For example, larger systems with lower memory errors, shorter circuit times, and lower two-qubit (2Q) error rates all appear achievable with current technology by moving towards natively 2D trapping architectures and by switching to qubit ions (such as ¹³⁷Ba⁺) that afford better state preparation and measurement (SPAM) fidelities than ¹⁷¹Yb⁺ and admit visible-wavelength, laser-based 2Q gates with more favorable error budgets. Because random-geometry (RG) circuits need only low depths to become hard to simulate, and a fixed fraction of qubits contributes to the worst-case hardness of simulation even as the qubit number N is scaled up at constant depth, near-term scaling progress in the QCCD architecture should enable the faithful execution of quantum circuits whose simulation lies well beyond the reach of any conceivable classical calculation.

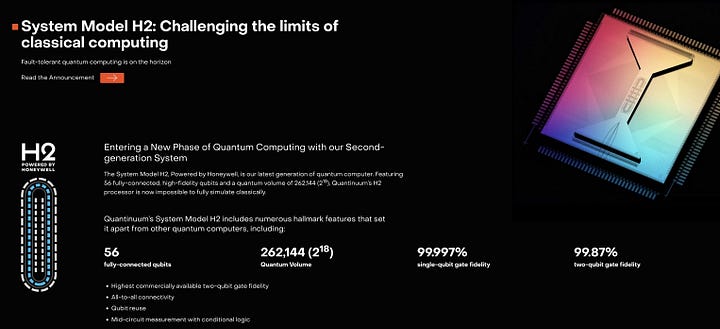

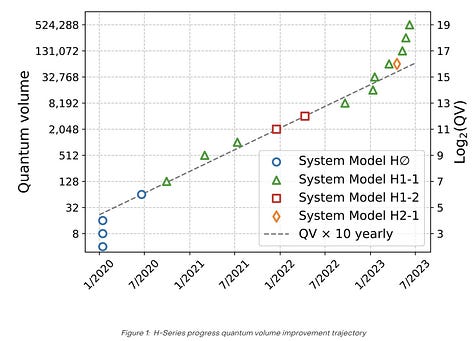

Upgrades to the Quantinuum H2 trapped-ion QCCD quantum computer enable it to operate with up to 56 qubits while maintaining arbitrary connectivity and further improving its already high two-qubit gate fidelities. As a test of the upgraded H2's capabilities, the Quantinuum team implemented RCS in randomly assigned geometries.
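To make "RCS in randomly assigned geometries" concrete, here is a minimal sketch of how such a circuit might be generated. It assumes that each layer pairs all qubits by a uniformly random perfect matching (the article does not state the exact pairing rule, and Quantinuum's construction may differ) and that every qubit first receives a Haar-random single-qubit gate, with a CZ-equivalent entangler then applied to each pair. The function names are hypothetical, and only the Python standard library is used.

```python
import math
import random


def haar_random_su2(rng: random.Random) -> list[list[complex]]:
    """Haar-random single-qubit gate as a 2x2 SU(2) matrix.

    A normalized 4D Gaussian vector is a uniform point (a, b, c, d) on the
    3-sphere, which maps to a Haar-random SU(2) element. The global phase
    dropped relative to U(2) has no physical effect on a circuit.
    """
    v = [rng.gauss(0.0, 1.0) for _ in range(4)]
    norm = math.sqrt(sum(x * x for x in v))
    a, b, c, d = (x / norm for x in v)
    return [[complex(a, b), complex(c, d)],
            [complex(-c, d), complex(a, -b)]]


def random_matching_layer(n_qubits: int, rng: random.Random) -> list[tuple[int, int]]:
    """Pair qubits by a uniformly random perfect matching (hypothetical layout rule).

    Arbitrary connectivity means any two qubits may be paired. With an odd
    qubit count, one qubit is left idle in that layer.
    """
    order = list(range(n_qubits))
    rng.shuffle(order)
    return [tuple(sorted(order[i:i + 2])) for i in range(0, n_qubits - 1, 2)]


def random_circuit(n_qubits: int, depth: int, seed: int = 0) -> list[dict]:
    """Build `depth` layers: Haar-random 1Q gates on every qubit, then one
    CZ-equivalent entangler on each randomly matched pair."""
    rng = random.Random(seed)
    circuit = []
    for _ in range(depth):
        singles = [haar_random_su2(rng) for _ in range(n_qubits)]
        pairs = random_matching_layer(n_qubits, rng)
        circuit.append({"1q": singles, "2q": pairs})
    return circuit


circuit = random_circuit(n_qubits=56, depth=4, seed=1)
print(len(circuit), len(circuit[0]["2q"]))  # 4 layers, 28 pairs per layer
```

Redrawing the matching every layer is what lets entanglement spread across the whole register in few layers, which is why such circuits become hard to simulate at low depth.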

So what of the comparison between the H2-1 results and a classical supercomputer?

The simplest comparison is between the time H2-1 took to perform RCS and the time a classical supercomputer takes. However, classical simulations of RCS can be made faster by building a larger supercomputer (or by distributing the workload across many existing supercomputers), so runtime alone is a weak benchmark. A more robust comparison is the amount of energy that must be expended to perform RCS on H2-1 versus on classical computing hardware: energy ultimately controls the real cost of performing RCS, and, to first order, it does not shrink when the work is spread across more machines. An analysis based on the most efficient known classical algorithm for RCS and the power consumption of leading supercomputers indicates that H2-1 can perform RCS at 56 qubits with an estimated 30,000x reduction in power consumption. These early results should be attractive to data center owners and supercomputing facilities looking to add quantum computers as “accelerators” for their users.
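The argument for comparing energy rather than runtime comes down to one line of arithmetic: energy is average power times wall-clock time. A minimal sketch follows; the helper names are hypothetical, and the numbers are placeholders for illustration only, not figures from the H2-1 analysis. It also assumes ideal parallel scaling, which real supercomputers only approximate.

```python
def energy_joules(power_watts: float, runtime_seconds: float) -> float:
    """Energy consumed by a run: average power draw times wall-clock time."""
    return power_watts * runtime_seconds


def energy_ratio(classical_power_w: float, classical_time_s: float,
                 quantum_power_w: float, quantum_time_s: float) -> float:
    """How many times more energy the classical run uses than the quantum run."""
    return (energy_joules(classical_power_w, classical_time_s)
            / energy_joules(quantum_power_w, quantum_time_s))


# Placeholder numbers, NOT from the H2-1 analysis. Assuming ideal parallel
# scaling, doubling the hardware halves the runtime but doubles the power
# draw, so the energy bill is unchanged.
one_machine = energy_joules(power_watts=20e6, runtime_seconds=3600.0)
two_machines = energy_joules(power_watts=2 * 20e6, runtime_seconds=3600.0 / 2)
print(one_machine == two_machines)  # True
```

Buying a bigger classical machine moves the runtime but not the energy, which is why an energy ratio is the more stable figure of merit.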

Where we go next

Today’s milestone announcements are clear evidence that the H2-1 quantum processor can perform computational tasks with far greater efficiency than classical computers. They underpin the expectation that as our quantum computers scale beyond today’s 56 qubits to hundreds, thousands, and eventually millions of high-quality qubits, classical supercomputers will quickly fall behind. Quantinuum’s quantum computers are likely to become the device of choice as scrutiny grows over the power consumption of classical computers running highly intensive workloads such as simulating molecules and material structures, tasks that are widely expected to benefit from a quantum speedup.

With this upgrade to 56 qubits, we will no longer offer a commercial “fully encompassing” emulator: a mathematically exact simulation of our H2-1 quantum processor is now impossible, as it would require the entire memory of the world’s best supercomputers. At 56 qubits, the only way to get exact results is to run on the actual hardware, a shift the leaders in this field have already embraced.
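The memory claim can be checked with back-of-the-envelope arithmetic for the most direct exact method, a full state-vector simulation. This is an assumption: the article does not say which simulation method it has in mind, and tensor-network methods trade memory for runtime. An n-qubit pure state has 2^n complex amplitudes, so every added qubit doubles the memory. The helper name below is hypothetical.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to hold all 2**n complex amplitudes of an n-qubit pure state.

    The default of 16 bytes per amplitude assumes double-precision complex
    numbers (complex128); single precision (complex64) would halve the total.
    """
    return (2 ** n_qubits) * bytes_per_amplitude


PIB = 2 ** 50  # one pebibyte, in bytes
for n in (40, 50, 56):
    print(f"{n} qubits: {statevector_bytes(n) / PIB:g} PiB")
# 40 qubits: 0.015625 PiB
# 50 qubits: 16 PiB
# 56 qubits: 1024 PiB
```

At 56 qubits the state vector alone is 2^60 bytes (one exbibyte) in double precision, before any working memory for applying gates.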

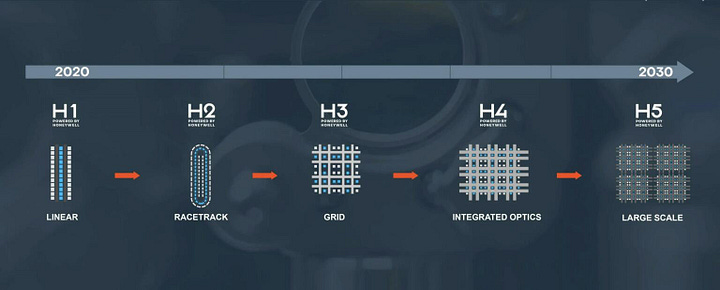

The differing architectures of H2 and the planned H3, H4, and H5 systems are steps toward mastering simpler enabling capabilities before adding new features. H3 will be a grid-based system that adds tighter control of the ion qubits. H4 will integrate control lasers into the processor. Together, these steps are intended to lead to large-scale quantum processors.

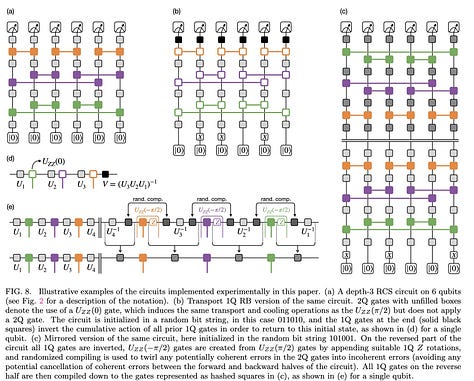

Arbitrary connectivity, together with the high gate fidelities achieved on the H2 quantum computer, enables sampling from classically challenging circuits in an unprecedented range of fidelity: circuits deep enough to saturate the cost of classical simulation by exact tensor network contraction (assuming no memory constraints) can be executed without a single error about 35% of the time. Historically, the most significant loophole in the claim that RCS is classically hard in practice has been the low circuit fidelities achievable for circuits deep enough to become hard to simulate classically. The high fidelities achieved in this work appear to firmly close this loophole.

Unlike previous RCS demonstrations, in which circuits were carefully defined around the best-performing achievable gates, these circuits are composed of natural perfect entanglers (equivalent to controlled-Z gates up to single-qubit rotations) and Haar-random single-qubit gates. All circuits are run “full stack” with the default settings that would be applied to jobs submitted by any H2 user, without any special-purpose compilation or calibration. The authors conclude that even at 56 qubits, the computational power of H2 for RCS is strongly limited by qubit number rather than by fidelity or clock speed, implying that the gap in computational power between QCCD-based trapped-ion quantum computers and classical computers will continue to grow very rapidly as qubit number is scaled up.
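The "35% of the time" figure is a circuit fidelity. RCS experiments typically estimate such fidelities with linear cross-entropy benchmarking (XEB). The article does not spell out the estimator, so what follows is a minimal, hypothetical sketch of the standard linear XEB formula, not Quantinuum's analysis code. It needs the noiseless output probabilities of each measured bitstring, which come from a classical simulation of the same circuit.

```python
def linear_xeb(ideal_probs: dict[str, float], samples: list[str], n_qubits: int) -> float:
    """Linear cross-entropy benchmarking (XEB) fidelity estimate.

    ideal_probs: noiseless output probability of each bitstring, obtained from
                 a classical simulation of the same circuit.
    samples:     bitstrings actually measured on the hardware.
    Returns 2**n * mean(p_ideal(sample)) - 1, which is roughly 1 for a perfect
    sampler on a random circuit and roughly 0 for uniformly random output.
    """
    if not samples:
        raise ValueError("need at least one sample")
    mean_p = sum(ideal_probs.get(s, 0.0) for s in samples) / len(samples)
    return (2 ** n_qubits) * mean_p - 1.0


# Toy 2-qubit example with a made-up ideal distribution (not H2 data).
ideal = {"00": 0.4, "01": 0.1, "10": 0.1, "11": 0.4}
print(linear_xeb(ideal, ["00", "11", "00", "01"], n_qubits=2))
```

The estimator rewards a device for landing on bitstrings the ideal circuit makes likely; noise pushes the output toward uniform, which drives the score toward zero.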

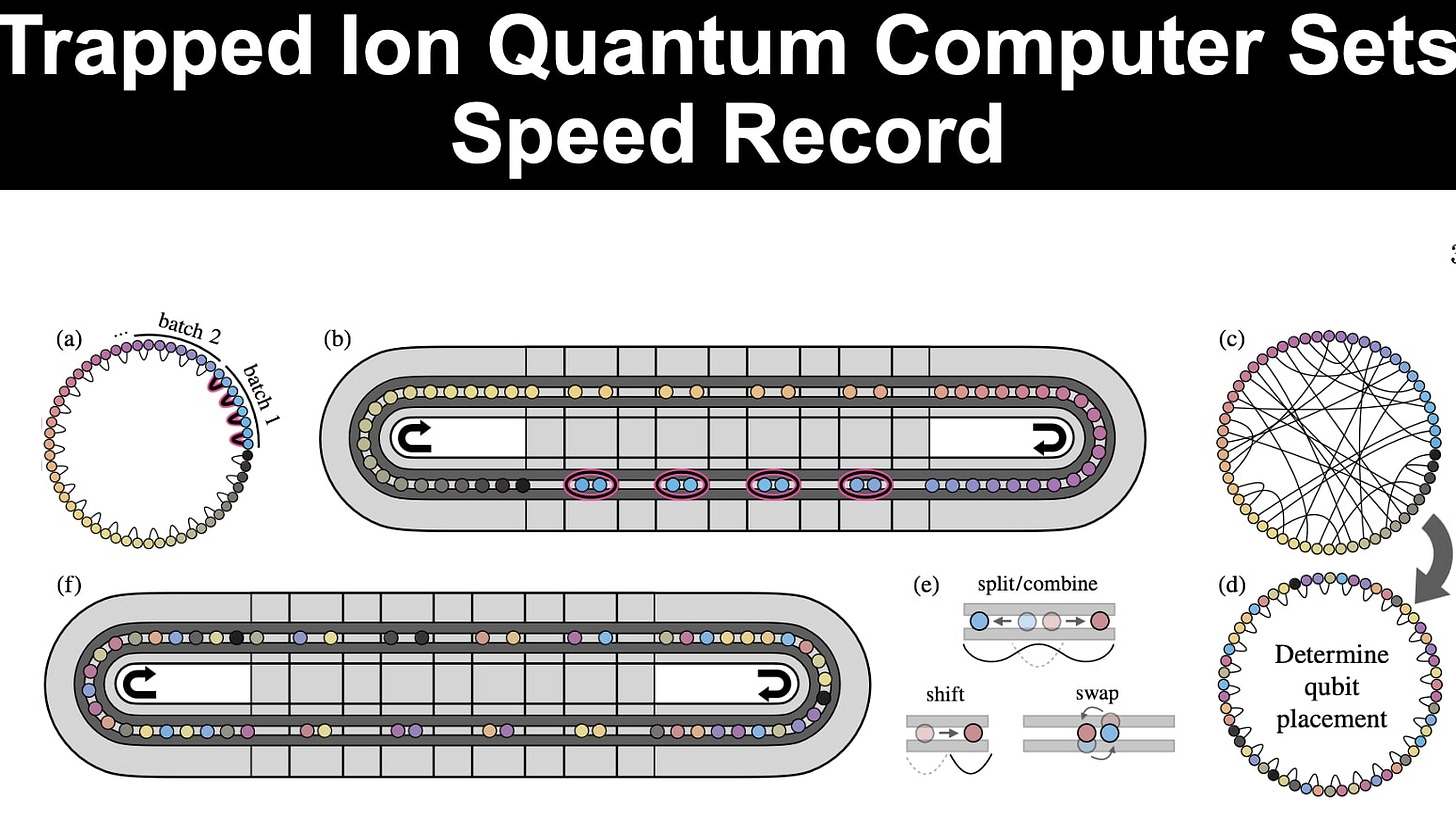

The H2-series quantum computers are built around a racetrack-shaped surface-electrode trap.

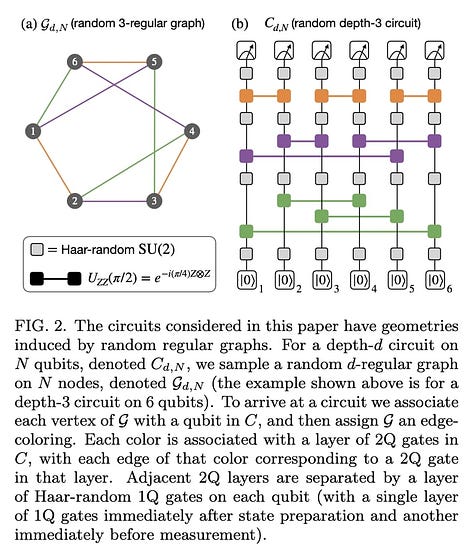

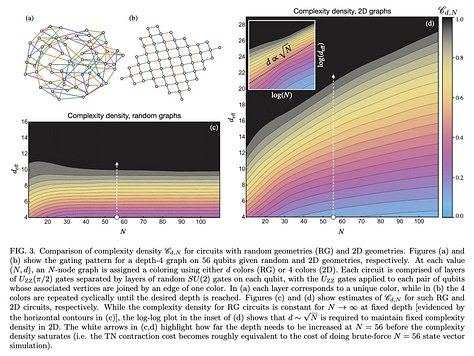

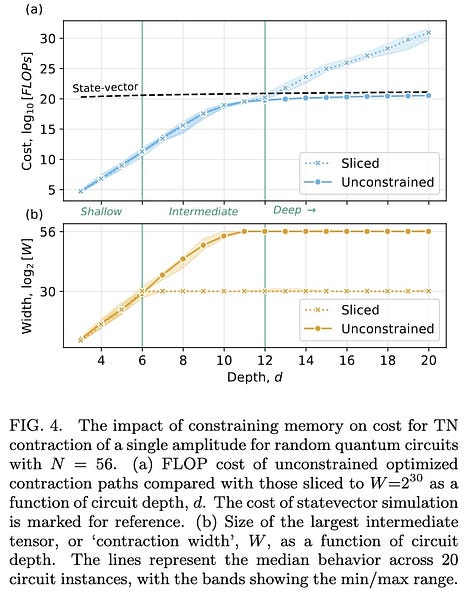

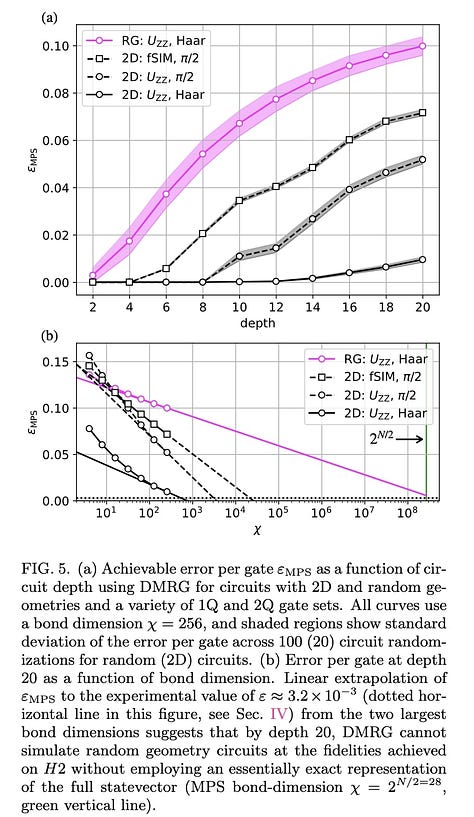

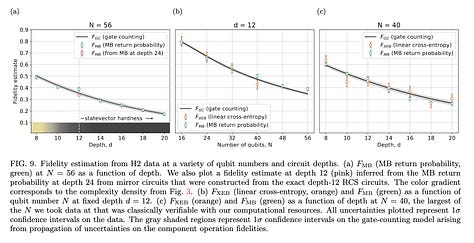

Empirical evidence for a gap between the computational powers of classical and quantum computers has been provided by experiments that sample the output distributions of two-dimensional quantum circuits. Many attempts to close this gap have used classical simulations based on tensor network techniques, and their limitations shed light on the hardware improvements needed to frustrate classical simulability. In particular, quantum computers with more than ~50 qubits are primarily vulnerable to classical simulation because of limits on their gate fidelity and their connectivity, the latter determining how many gates are required (and therefore how much infidelity is incurred) to generate highly entangled states. The authors describe recent hardware upgrades to Quantinuum’s H2 quantum computer that enable it to operate on up to 56 qubits with arbitrary connectivity and 99.843(5)% two-qubit gate fidelity. Using the flexible connectivity of H2, they present random circuit sampling data in highly connected geometries, obtained at unprecedented fidelities and at a scale that appears to be beyond the capabilities of state-of-the-art classical algorithms. The considerable difficulty of classically simulating H2 is likely limited only by qubit number, demonstrating the promise and scalability of the QCCD architecture as progress continues towards larger machines.
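Why connectivity matters for fidelity can be seen in a simple error budget. Under an assumed independent-error model in which every two-qubit gate succeeds with the same fidelity f and errors are uncorrelated, a circuit with G two-qubit gates has fidelity of about f^G, so every extra gate (for example, the three controlled-NOTs that make up each SWAP on hardware with limited connectivity) multiplies in another factor of f. The sketch below ignores single-qubit, SPAM, and memory errors, its function names are hypothetical, and the gate count it prints is an illustration of the model, not the gate count of the H2 circuits.

```python
import math


def estimated_circuit_fidelity(two_qubit_fidelity: float, n_two_qubit_gates: int) -> float:
    """Independent-error model: circuit fidelity is the product of per-gate
    fidelities. Single-qubit, SPAM and memory errors are ignored (an assumption)."""
    return two_qubit_fidelity ** n_two_qubit_gates


def max_gates_for_target(two_qubit_fidelity: float, target_fidelity: float) -> int:
    """Largest two-qubit gate count whose estimated fidelity stays >= target."""
    return math.floor(math.log(target_fidelity) / math.log(two_qubit_fidelity))


F2 = 0.99843  # two-qubit gate fidelity quoted for H2
print(max_gates_for_target(F2, 0.35))  # 668 under this simplified model
```

Because fidelity decays exponentially in gate count, removing routing overhead through arbitrary connectivity buys as much as a substantial improvement in per-gate fidelity would.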

![](https://nextbigfuture.s3.amazonaws.com/uploads/2024/07/Screen-Shot-2024-07-11-at-11.08.09-PM.jpg)
