Outline of AI for Self-Improving Science and AI

AI capabilities are growing exponentially, but the pace of research is still limited by human-in-the-loop, human-driven work. Researchers have unveiled ASI-Arch, a proposed system architecture for AI research. It is presented as a full paradigm shift to automated innovation, in which AI handles the entire scientific process end to end.

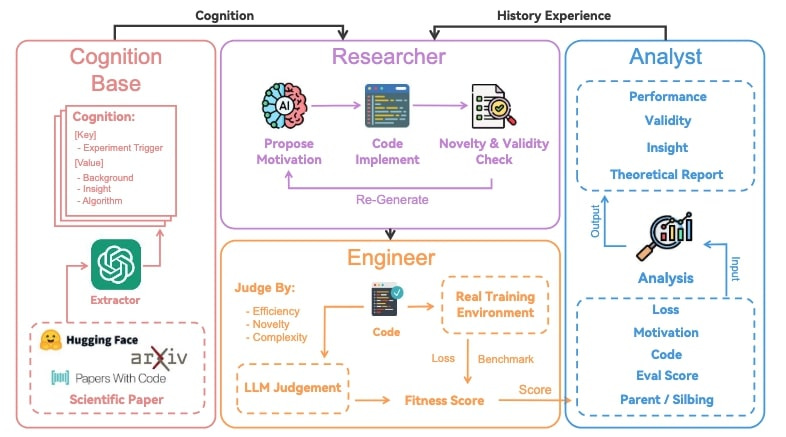

An overview of our…
