OpenAI Releases o3 Model With High Performance and High Cost

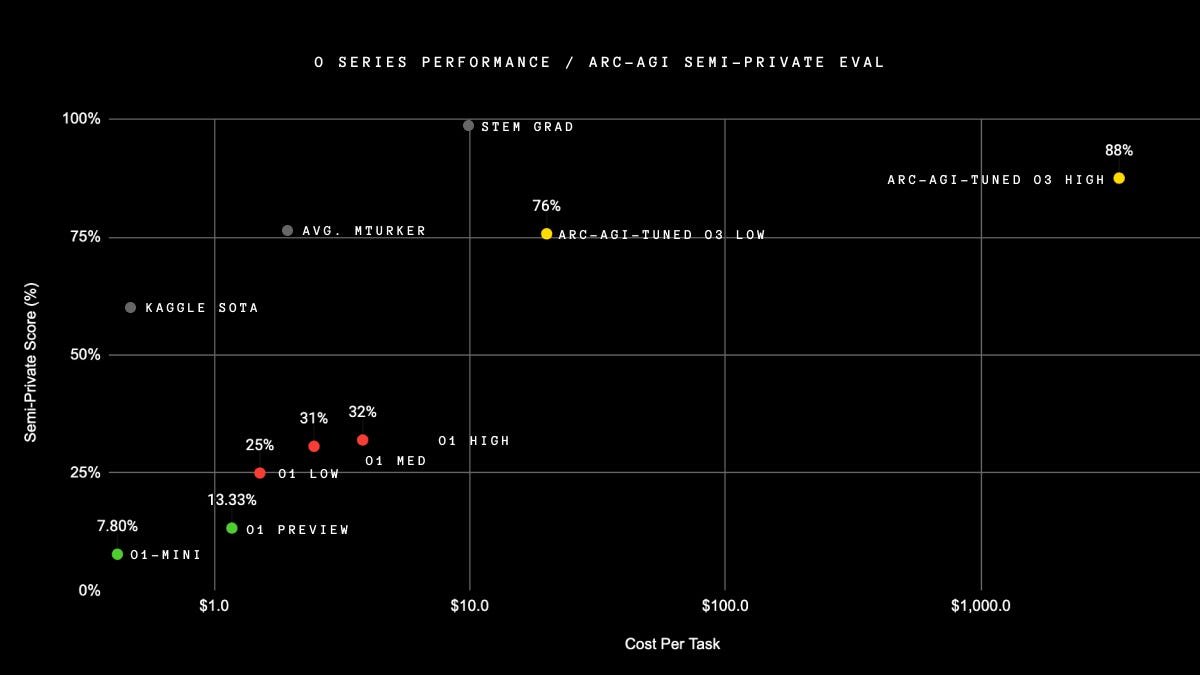

OpenAI's o3 sets new records in several key areas, particularly reasoning, coding, and mathematical problem-solving. On the ARC-AGI semi-private evaluation set it scores 75.7% in low-compute mode (about $20 of compute per task) and 87.5% in high-compute mode (thousands of dollars per task). That is very expensive, but the result is not just brute force. These capabilities are new territory and they demand serious scientific attention.

Benchmark Performance

ARC-AGI Benchmark

o3 has achieved a breakthrough score on the ARC-AGI benchmark, which is considered an indicator of progress toward artificial general intelligence:

o3 scored 75.7% in low-compute mode

With increased resources (high-compute mode), o3 reached an unprecedented 87.5%

This performance surpasses the human-level threshold of 85% and represents a significant leap from its predecessor, o1, which only scored 32%

Mathematics and Problem-Solving

o3 shows strong mathematical reasoning and problem-solving:

Nearly perfect score (96.7%) on the 2024 American Invitational Mathematics Examination (AIME)

25.2% on Epoch AI's FrontierMath benchmark, far exceeding previous models that couldn't break 2%

Coding and Software Engineering

In coding-related tasks, o3 shows substantial improvements:

SWE-Bench Verified: 71.7%, which is 22.8 percentage points higher than o1

Codeforces: Achieved an Elo rating of 2,727

Other Notable Benchmarks

GPQA Diamond: 87.7%, compared to o1's 78%
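
To put the o3 versus o1 gaps quoted above on a common footing, the short sketch below computes both the percentage-point gain and the relative gain for each benchmark where this article gives both scores. The scores are taken from the text; the o1 SWE-Bench Verified figure of 48.9% is not stated directly and is derived here from the 22.8-point gap. The dictionary layout and the helper name `gains` are illustrative choices, not part of any benchmark tooling.

```python
# Scores (percent) as quoted in this article: (o3, o1).
# Assumption: the o1 SWE-Bench Verified score is derived from the stated
# 22.8-point gap (71.7 - 22.8 = 48.9), not quoted directly.
scores = {
    "ARC-AGI (high compute)": (87.5, 32.0),
    "SWE-Bench Verified": (71.7, 48.9),
    "GPQA Diamond": (87.7, 78.0),
}


def gains(o3, o1):
    """Return (percentage-point gain, relative gain in percent), rounded to 1 decimal."""
    point_gain = o3 - o1
    relative_gain = point_gain / o1 * 100
    return round(point_gain, 1), round(relative_gain, 1)


for name, (o3, o1) in scores.items():
    points, relative = gains(o3, o1)
    print(f"{name}: +{points} points, {relative}% relative to o1")
```

The two measures tell different stories: a 9.7-point gain on GPQA Diamond is a modest relative improvement because o1 was already at 78%, while the ARC-AGI gain is large on both measures.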

Comparison with Gemini 2 and Other Models

While o3 demonstrates exceptional performance, Gemini 2 and other models also show strong capabilities:

Gemini 2.0 Flash

Outperforms its predecessor Gemini 1.5 Pro on key benchmarks

Excels in competition-level math problems, achieving state-of-the-art results on MATH and HiddenMath

Performs well in language and multimedia understanding, outperforming GPT-4o on MMLU-Pro

Model Rankings

In various benchmarks and comparisons:

Chatbot Arena: Gemini 2.0 Experimental Advanced ranks slightly above the latest version of OpenAI's ChatGPT-4o

MMLU-Pro: Gemini 2.0 Flash outperforms GPT-4o but is behind Claude 3.5 Sonnet

Coding ability: Claude 3.5 Sonnet, GPT-4o, o1-preview, and o1-mini outperform Gemini 2.0 Flash
