Open Source and China Have Caught Up With OpenAI

A 100-person Chinese startup, DeepSeek, just released the first Open Source reasoning model to match OpenAI's o1 reasoning model. OpenAI was charging $200 per month for access to its o1 pro model.

How did an unknown 100-person startup with no VC funding produce a frontier Open Source model that rivaled OpenAI and Anthropic at one-tenth of the training cost, while being 20-50x cheaper to run for inference?

Highlights

The success of DeepSeek suggests the value of hiring young outsiders: brilliant, passionate and curious new grads rather than experienced AI researchers. There needs to be more research and experimentation with different AI approaches.

China has caught up in AI.

Open Source has caught up in AI.

The price of AI (per million tokens) has collapsed by as much as 50 times.

They distilled models, transferring reasoning capabilities into other models and making them smaller and better. We can run near OpenAI o1-class models on $1,000-2,000 worth of hardware.

They still needed a decent amount of hardware (10,000 A100 GPUs, though this is about 100 times less than competitors use).

Story of DeepSeek

In 2007, three engineers, Xu Jin, Zheng Dawei, and Liang Wenfeng (CEO), met at Zhejiang University and bonded over algorithmic trading. Their idea? Build a quant fund powered by cutting-edge AI. But instead of hiring industry veterans, they prioritized raw talent and curiosity over experience. Liang: “Core technical roles are primarily filled by recent grads or those 1–2 years out.”

They launched their algorithmic trading fund in 2015, and by 2021 it had made $140 million.

They owned 10,000 NVIDIA A100 GPUs and became a top-four quant fund with $15 billion in assets under management. They lost a lot of money in 2022.

In 2023, instead of giving up, they pivoted. They spun out DeepSeek, an AI lab fueled by their existing talent and 10,000 GPUs. No VC funding. They went all-in. The twist? They kept the same philosophy of hiring outsiders: brilliant, passionate and curious new grads rather than experienced AI researchers.

Frontier Open Source Model

On Christmas, they shocked the AI world with DeepSeek V3:

- Trained for just $6M, yet rivaled GPT-4o and Claude 3.5 Sonnet.

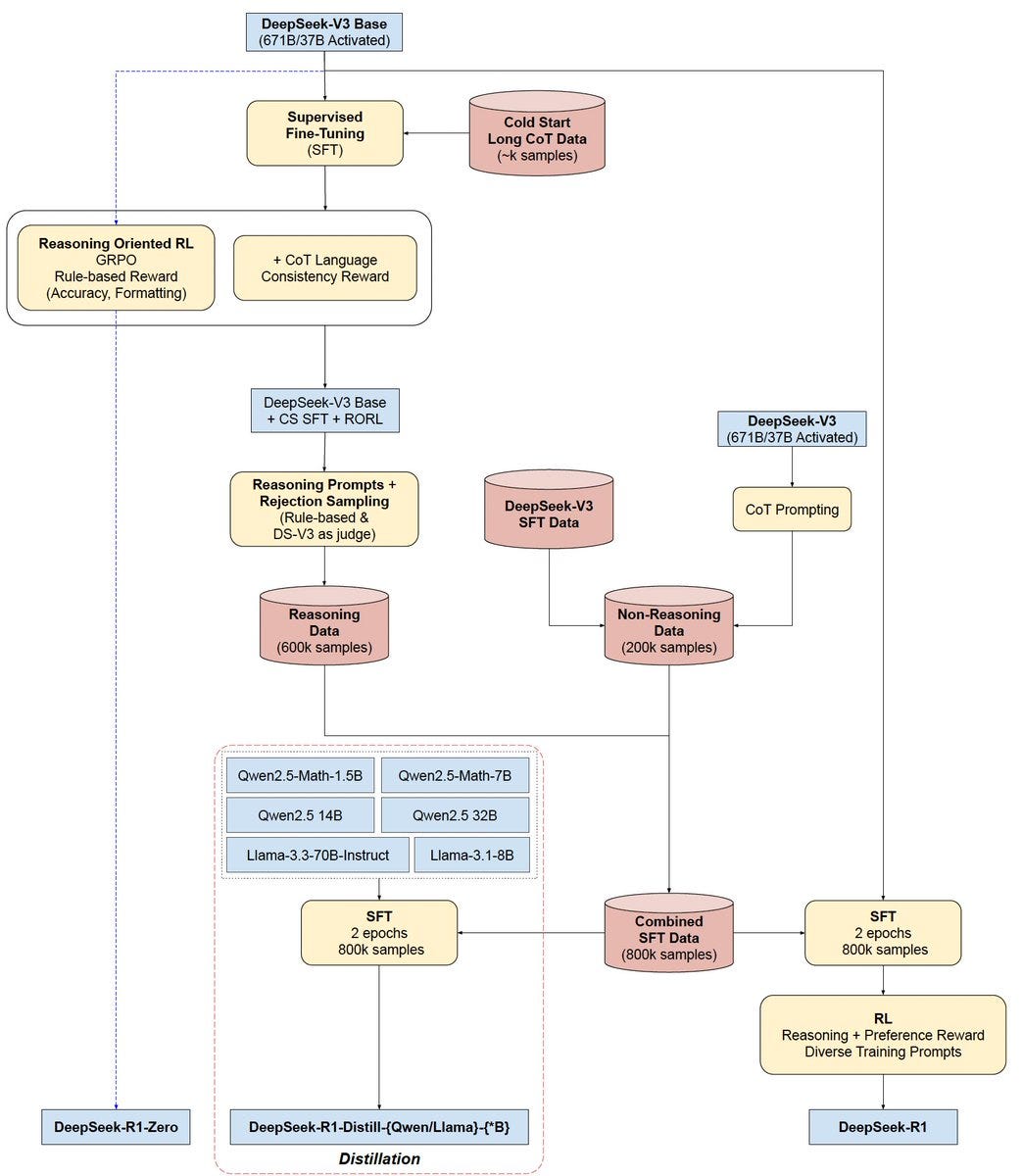

- Introduced groundbreaking innovations such as Multi-Token Prediction, FP8 mixed-precision training, reasoning capabilities distilled from R1, and an auxiliary-loss-free strategy for load balancing.

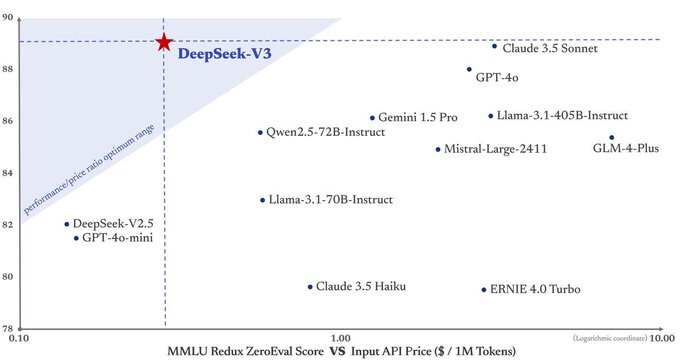

- API prices 20-50x lower than the competition:
  - DeepSeek: $0.14 / 1M in, $0.28 / 1M out
  - OpenAI: $2.50 / 1M in, $10 / 1M out
  - Anthropic: $3 / 1M in, $15 / 1M out
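The auxiliary-loss-free load balancing listed above is described in the DeepSeek-V3 technical report: each expert in the mixture-of-experts layer gets a bias term that is added to its routing score only when choosing the top-K experts, and after each training step that bias is lowered for overloaded experts and raised for underloaded ones, so balance is enforced without an extra loss term. The gating weights themselves still come from the original, unbiased scores. The NumPy sketch below is a simplified illustration, not DeepSeek's code; treating "overloaded" as "above the mean load", the step size `gamma`, and the helper names `route_tokens` and `update_bias` are assumptions.

```python
import numpy as np


def route_tokens(scores: np.ndarray, bias: np.ndarray, top_k: int):
    """Select top_k experts per token using bias-adjusted scores.

    scores: (num_tokens, num_experts) affinity scores, e.g. sigmoid outputs.
    bias:   (num_experts,) per-expert bias, used ONLY to pick experts.
    Returns (expert indices, gating weights computed from the raw scores).
    """
    adjusted = scores + bias
    # Indices of the top_k largest bias-adjusted scores for each token.
    topk_idx = np.argsort(-adjusted, axis=1)[:, :top_k]
    # Gating weights use the ORIGINAL scores, normalized over the chosen experts.
    raw = np.take_along_axis(scores, topk_idx, axis=1)
    gates = raw / raw.sum(axis=1, keepdims=True)
    return topk_idx, gates


def update_bias(bias: np.ndarray, topk_idx: np.ndarray, num_experts: int, gamma: float) -> np.ndarray:
    """Lower the bias of overloaded experts and raise it for underloaded ones.

    Assumption: an expert counts as overloaded when its load exceeds the mean load.
    """
    load = np.bincount(topk_idx.ravel(), minlength=num_experts)
    return bias - gamma * np.sign(load - load.mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    scores = rng.random((1000, 8))
    scores[:, 0] += 0.5  # expert 0 is systematically favored by the router
    bias = np.zeros(8)
    for _ in range(200):
        idx, gates = route_tokens(scores, bias, top_k=2)
        bias = update_bias(bias, idx, num_experts=8, gamma=0.01)
    print("per-expert load:", np.bincount(idx.ravel(), minlength=8))
```

In the demo, expert 0 starts out receiving almost every token; as its bias drifts down and the others drift up, the load spreads across all eight experts, while the gating weights for each token still sum to 1.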

This week, they were the first to release a fully open source reasoning model that matched OpenAI o1.

They shared their learnings publicly, revealing that DeepSeek-R1-Zero, the precursor to R1, was trained through pure reinforcement learning, without supervised fine-tuning or a learned reward model.
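The pure reinforcement learning described above can be made a bit more concrete. DeepSeek's R1 paper names the RL algorithm Group Relative Policy Optimization (GRPO) and uses rule-based accuracy and format rewards instead of a learned reward model. GRPO samples a group of answers to the same prompt and normalizes each answer's reward against the group's mean and standard deviation, so no separate value model is needed. The sketch below covers only those two pieces and omits the policy-gradient update; the reward weights (0.5 and 1.0), the exact-match answer check, the use of the population standard deviation, and the function names are hypothetical.

```python
import re
import statistics


def rule_based_reward(completion: str, reference_answer: str) -> float:
    """Hypothetical rule-based reward: no neural reward model involved."""
    reward = 0.0
    # Format reward: the reasoning must be wrapped in <think>...</think> tags.
    if re.search(r"<think>.*?</think>", completion, flags=re.DOTALL):
        reward += 0.5
    # Accuracy reward: whatever follows the reasoning must match the reference exactly.
    answer = re.sub(r"<think>.*?</think>", "", completion, flags=re.DOTALL).strip()
    if answer == reference_answer:
        reward += 1.0
    return reward


def group_relative_advantages(rewards: list[float]) -> list[float]:
    """GRPO-style advantage: (reward - group mean) / group standard deviation."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        # Every sample scored the same, so no answer is better than its peers.
        return [0.0 for _ in rewards]
    return [(r - mean) / std for r in rewards]


if __name__ == "__main__":
    # A group of four sampled completions for the prompt "What is 2+2?"
    group = ["<think>2+2 is 4</think>4", "<think>guess</think>5", "4", "five"]
    rewards = [rule_based_reward(c, "4") for c in group]
    print(rewards, group_relative_advantages(rewards))
```

Completions that are both well-formatted and correct receive positive advantages and are reinforced; the rest receive negative ones, and the advantages within a group always sum to zero.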

And the API prices are still 20-50x lower than the competition:

- DeepSeek R1: $0.14 to $0.55 / 1M in, $2.19 / 1M out

- OpenAI o1: $7.50 to $15 / 1M in, $60 / 1M out
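To see where the 20-50x figure comes from, this sketch prices a hypothetical workload of 10M input tokens and 2M output tokens at the list prices quoted in this post, taking the upper (cache-miss) end of the R1 and o1 input ranges. The workload size and the helper names are assumptions; a real bill depends on the input/output mix and on caching.

```python
# List prices in USD per 1M tokens, as (input, output), taken from the figures in this post.
# For R1 and o1 the upper end of the quoted input range is used.
PRICES = {
    "DeepSeek V3": (0.14, 0.28),
    "OpenAI": (2.50, 10.00),
    "Anthropic": (3.00, 15.00),
    "DeepSeek R1": (0.55, 2.19),
    "OpenAI o1": (15.00, 60.00),
}


def workload_cost(model: str, input_millions: float, output_millions: float) -> float:
    """Dollar cost of a workload measured in millions of input and output tokens."""
    price_in, price_out = PRICES[model]
    return price_in * input_millions + price_out * output_millions


def price_ratio(expensive: str, cheap: str, input_millions: float, output_millions: float) -> float:
    """How many times more the expensive model costs for the same workload."""
    return (workload_cost(expensive, input_millions, output_millions)
            / workload_cost(cheap, input_millions, output_millions))


if __name__ == "__main__":
    # Hypothetical workload: 10M input tokens and 2M output tokens.
    for expensive, cheap in [("OpenAI", "DeepSeek V3"),
                             ("Anthropic", "DeepSeek V3"),
                             ("OpenAI o1", "DeepSeek R1")]:
        print(f"{expensive} vs {cheap}: {price_ratio(expensive, cheap, 10, 2):.1f}x")
```

For this workload DeepSeek V3 costs $1.96, versus $45 at OpenAI's prices and $60 at Anthropic's, and R1 costs $9.88 versus $270 for o1: ratios of roughly 23x, 31x and 27x. A more output-heavy workload pushes the Anthropic comparison toward the 50x end.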


DeepSeek Process
