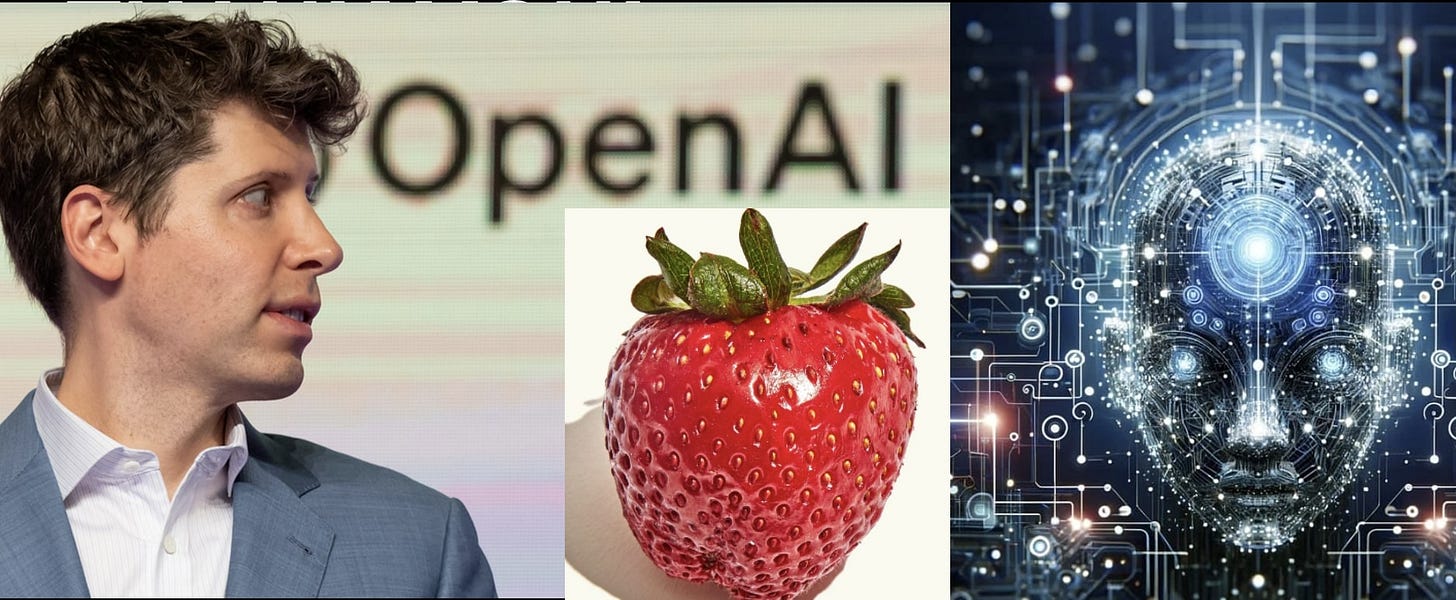

New Rumor of an OpenAI Next Level Reasoning Breakthrough

By Brian Wang

OpenAI's project Strawberry is focused on enhancing the reasoning capabilities of its AI models. Strawberry is rumored to be based on the QStar AI advance. QStar was a reasoning advance that supposedly triggered the power struggle inside OpenAI and the temporary firing of CEO Sam Altman.

Strawberry supposedly enables AI to perform long-horizon tasks (LHT), which require a model to plan ahead and execute a series of actions over an extended period of time. This technology aims to improve AI's ability to understand and interact with the world, allowing it to autonomously search the internet and conduct deep research. By improving how AI models think and plan, OpenAI hopes to unlock new levels of intelligence and bring AI closer to human-level intelligence or beyond.

ChatGPT maker OpenAI is working on a novel approach to its artificial intelligence models in a project code-named “Strawberry,” according to a person familiar with the matter and internal documentation reviewed by Reuters. The project, details of which have not been previously reported, comes as the Microsoft-backed startup races to show that the types of models it offers are capable of delivering advanced reasoning capabilities.

How Strawberry works is a tightly kept secret even within OpenAI, the person said. The document describes a project that uses Strawberry models with the aim of enabling the company’s AI to not just generate answers to queries but to plan ahead enough to navigate the internet autonomously and reliably to perform what OpenAI terms “deep research,” according to the source.

Ilya Sutskever was the Chief Scientist at OpenAI, but he has left and formed his own company, Safe SuperIntelligence. Ilya knows about QStar and Strawberry. It would be tough to keep this breakthrough secret. Others in the community are pursuing reasoning breakthroughs.

Two sources described viewing earlier this year what OpenAI staffers told them were Q* demos, capable of answering tricky science and math questions out of reach of today’s commercially-available models.

Reasoning is key to AI achieving human or super-human-level intelligence.

Strawberry includes a specialized form of what is known as post-training OpenAI’s generative AI models, or adapting the base models to hone their performance in specific ways after they have already been “trained” on reams of generalized data.

The post-training phase of developing a model involves methods like fine-tuning, a process used on nearly all language models today that comes in many flavors, such as having humans give feedback to the model based on its responses and feeding it examples of good and bad answers.

Strawberry has similarities to a method developed at Stanford in 2022 called “Self-Taught Reasoner” or “STaR”, one of the sources with knowledge of the matter said. STaR enables AI models to “bootstrap” themselves into higher intelligence levels via iteratively creating their own training data, and in theory could be used to get language models to transcend human-level intelligence, one of its creators, Stanford professor Noah Goodman, told Reuters.
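
To make the “bootstrap” idea concrete, here is a minimal toy sketch of a STaR-style loop: the model generates a rationale and an answer for each problem, only the rationales that reach the correct answer are kept, and the model is then fine-tuned on those kept examples before the next round. Everything in it is a hypothetical stand-in for illustration. `ToyModel`, `Problem`, `star_loop`, and the rule that training on difficulty d unlocks difficulty d + 1 are assumptions, not OpenAI's method and not the published STaR implementation, and the sketch leaves out other details of the published method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Problem:
    question: str
    answer: int
    difficulty: int


class ToyModel:
    """Hypothetical stand-in for a language model with a single 'skill' level."""

    def __init__(self, skill=1):
        self.skill = skill

    def generate(self, problem):
        """Return (rationale, answer); the answer is wrong if the problem is too hard."""
        if problem.difficulty <= self.skill:
            return f"worked steps for {problem.question}", problem.answer
        return "guess", problem.answer + 1

    def fine_tune(self, examples):
        """Toy update (an assumption): training on difficulty d unlocks d + 1."""
        if examples:
            hardest = max(p.difficulty for p, _ in examples)
            self.skill = max(self.skill, hardest + 1)


def star_loop(model, problems, iterations):
    """Run the generate -> filter -> fine-tune cycle; return kept counts per round."""
    history = []
    for _ in range(iterations):
        kept = []
        for p in problems:
            rationale, answer = model.generate(p)
            # Filter: keep only rationales that led to the known correct answer.
            if answer == p.answer:
                kept.append((p, rationale))
        # The model's own filtered outputs become its next training set.
        model.fine_tune(kept)
        history.append(len(kept))
    return history


problems = [Problem(f"{d}+{d}", 2 * d, d) for d in range(1, 6)]
model = ToyModel(skill=1)
history = star_loop(model, problems, iterations=4)
print(history)  # number of self-generated training examples kept in each round
```

In this toy setup the number of kept examples grows each round because each fine-tuning step lets the model solve slightly harder problems. A real system would replace `generate` and `fine_tune` with actual model sampling and training, and whether the improvement continues would depend on how well the model generalizes.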