Yann LeCun is both a giant in the field of AI but also a major skeptic of the ultimate potential of large language models. He is a major skeptic about large language models getting to TRUE AI (aka real AGI or actual superintelligence). He feels the LLM AI will not be able to learn the physics of the real world.

Yann LeCun is a French computer scientist regarded as one of the fathers of modern deep learning. In 2018, he received the Turing Award, often called the “Nobel Prize of Computing.” He is currently a professor at New York University and Chief AI Scientist at Meta (formerly Facebook), where he continues his research on machine learning algorithms. His work underpins today’s AI landscape, influencing technologies such as speech recognition, satellite image analysis, and recommendation systems.

He feels the neural networks of large language models will fail to go the next step. He thinks Tesla FSD will fail to solve robotaxi. This is despite thousands of Tesla cars driving without drivers from factory to loading dock in Fremont every day. Day or night. Rain or shine. There are also hundreds of thousands of Tesla driving without human drivers up to 85 meters for actual smart summon (parking to summoner) or dispatch summoner to parking spot. Summons and dispatches are happening every day in the US, Mexico, Canada and now China.

In June 2025, Tesla robotaxi breakthrough should see hundreds of thousands of cars without human drivers giving paid rides in Austin and then spreading around the world to millions of cars in 2026.

It took 6 billion miles of driving data to reach this point. A mile per minute of video. It is one minute to drive a mile at 60 miles per hour. Two minutes at 30 miles per hour. 6 billion miles of driving data is about 11000 years of driving data.

Yann LeCun (AI Legend but LLM AI Skeptic) says it takes 20 hours for a teenager to learn to drive. However, it takes 12 years of video data to train a child to get ready to learn to drive. Yann discusses how it takes a child 4 years to learn the basics of the world. So he knows it takes time to get real world knowledge and an internal world model before the learn to drive step can happen.

This means Tesla AI and FSD is 1000-10000 times less efficient than humans learning to drive. Although learning to drive perfectly (like the robotaxi goal of over 10 times safer than human) probably takes a human 5-10 years. Humans might never reach ten times safer than the average human. The near perfect driving standard means 500 times less efficient for AI. Let us assume 1000 times less efficiency for LLMs. ~50,000 years of video training data should be enough to train the real world and most real world learning of any kind that humans do.

This inefficiency could end up not mattering. IF we gather 100,000 years or millions of years of video to learn the real world. We then 1500x more compute than was used in Jan 2025 in about two to three years to process it. I think this will occur around 2027-2029. We use millions of cameras to gather data for 2-3 years, this will enable the collection of millions of years of video to train.

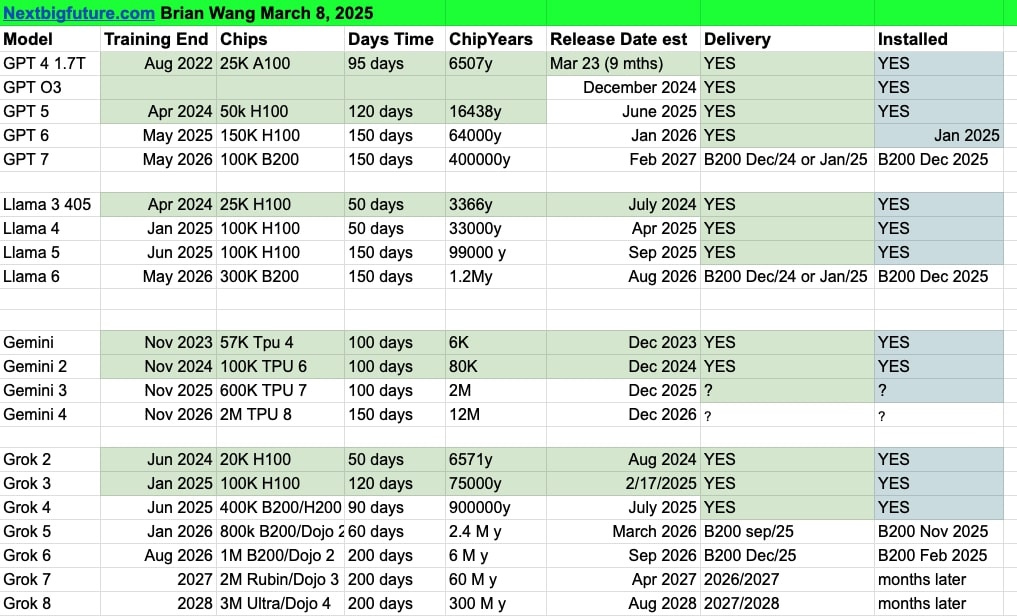

I have tracked the construction of the xAI data center in Memphis. I know xAI is adding gas turbines which will enable 1.2 GW of power around the end of this year. This will be in range of powering 1 million GPUs (Nvidia B200s). There are many better chips coming.

I have projected with rack density it is <a href="https://www.nextbigfuture.com/2025/03/construction-power-timeline-for-xai-to-reach-a-3-million-gpu-supercluster.html">possible to put 3 million chips into the Memphis xAI building</a>. There needs to be a tripling of power delivered to the building. This is also possible. This could be a 2027-2028 completion. 30X more chips than was used for Grok 3 (100k H100). Using Nvidia Ultra or Dojo 4 chips 100X better than H100. Training for 3 times longer. 9000X more compute. Other improvements to networking, memory, AI models.

This supercluster will need to process 20 quadrillion tokens of video and other data.

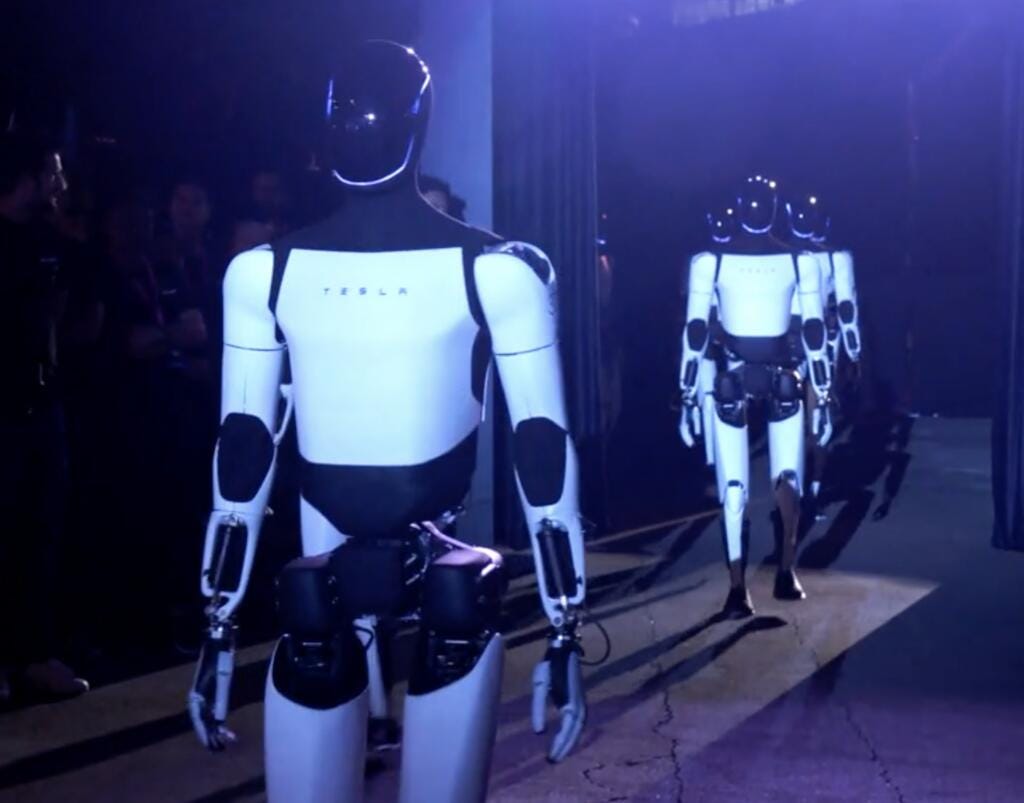

Tesla plans to make 1 million Teslabots in 2027. There will be 5000-10,000 this year. There should be 50,000-100,000 in 2026. This would mean 100,000 humanoid bot years of data and video for those 2026 bots.

Keep reading with a 7-day free trial

Subscribe to next BIG future to keep reading this post and get 7 days of free access to the full post archives.