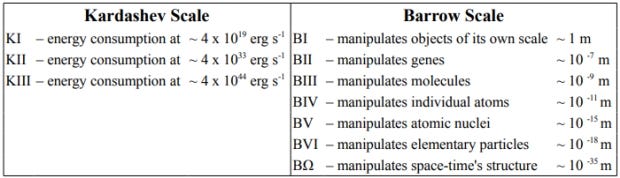

Humanity is on the verge of AGI (Artificial General Intelligence). Futurist Ray Kurzweil predicted decades ago that we would reach AGI in 2029, and AI and Large Language Models could get there even sooner. However, there are open issues around how to define and measure artificial intelligence that surpasses individual humans.

Kurzweil also predicted the Singularity for 2045. He defined it as the point at which cumulative artificial intelligence exceeds the total intelligence of humanity.

Beyond the Singularity lie computronium and the ultimate limits of technology and computing.

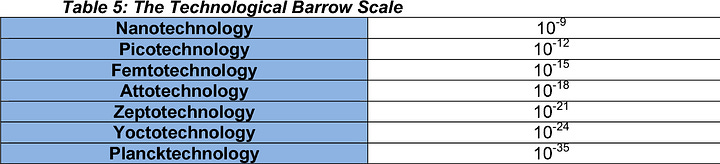

Computronium refers to arrangements of matter that form the best possible computing device for that amount of matter. This could be a theoretically perfect arrangement of hypothetical materials developed using nanotechnology at the molecular, atomic, or subatomic level.

There are several physical and practical limits to the amount of computation or data storage that can be performed with a given amount of mass, volume, or energy:

* The Bekenstein bound limits the amount of information that can be stored within a spherical volume to the entropy of a black hole with the same surface area.

* Thermodynamics limits the data storage of a system based on its energy, number of particles, and particle modes. In practice, it is a stronger bound than the Bekenstein bound.

* Landauer’s principle defines a lower theoretical limit for energy consumption: kT ln 2 joules consumed per irreversible state change, where k is the Boltzmann constant and T is the operating temperature of the computer.

* Reversible computing is not subject to this lower bound. Separately, the operating temperature T cannot, even in theory, be made lower than about 3 kelvin (the cosmic microwave background radiation is about 2.7 K) without spending more energy on cooling than is saved in computation.

* Bremermann’s limit is the maximum computational speed of a self-contained system in the material universe, about 1.36 × 10^50 bits per second per kilogram. It is based on mass-energy versus quantum uncertainty constraints.

* The Margolus–Levitin theorem bounds the maximum computational speed per unit of energy at about 6 × 10^33 operations per second per joule. This bound can, however, be avoided with access to quantum memory: computational algorithms can then be designed that require an arbitrarily small amount of energy or time per elementary computation step.
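The limits in the list above can be evaluated numerically. The following is a minimal Python sketch, not taken from the source: the function names are hypothetical, the constants are CODATA 2018 SI values, and the storage bound is computed in the black-hole-entropy (holographic) form that the Bekenstein bullet describes, assuming a sphere of radius R rather than Bekenstein's original energy-dependent formula.

```python
import math

# CODATA 2018 SI values (k_B, h and c are exact by definition; G is measured)
K_B = 1.380649e-23        # Boltzmann constant, J/K
H = 6.62607015e-34        # Planck constant, J*s
HBAR = H / (2 * math.pi)  # reduced Planck constant, J*s
C = 299_792_458.0         # speed of light, m/s
G = 6.67430e-11           # gravitational constant, m^3/(kg*s^2)


def landauer_energy(temperature_k: float) -> float:
    """Minimum energy (J) per irreversible state change: k*T*ln 2."""
    return K_B * temperature_k * math.log(2)


def bremermann_limit(mass_kg: float) -> float:
    """Maximum bit rate (bits/s) of a self-contained system: m*c^2/h."""
    return mass_kg * C**2 / H


def margolus_levitin_rate(energy_j: float) -> float:
    """Maximum operations/s for average energy E: 4E/h, i.e. 2E/(pi*hbar)."""
    return 4 * energy_j / H


def holographic_bits(radius_m: float) -> float:
    """Bits in a black hole whose horizon area equals a sphere of radius R.

    Bekenstein-Hawking entropy S/k = A*c^3 / (4*G*hbar), converted from nats to bits.
    """
    area = 4 * math.pi * radius_m**2
    entropy_nats = area * C**3 / (4 * G * HBAR)
    return entropy_nats / math.log(2)


if __name__ == "__main__":
    print(f"Landauer at 300 K: {landauer_energy(300):.3e} J")
    print(f"Landauer at 3 K:   {landauer_energy(3):.3e} J")
    print(f"Bremermann, 1 kg:  {bremermann_limit(1.0):.3e} bits/s")
    print(f"Margolus-Levitin:  {margolus_levitin_rate(1.0):.3e} ops/s per joule")
    print(f"Holographic, 1 m:  {holographic_bits(1.0):.3e} bits")
```

Run as a script, it prints roughly 2.9 × 10^-21 J at 300 K (100 times less at 3 K), about 1.36 × 10^50 bits per second for one kilogram, about 6.0 × 10^33 operations per second per joule, and about 1.7 × 10^70 bits for a sphere one meter in radius.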

It is unclear what the computational limits are for quantum computers.

In The Singularity Is Near, Ray Kurzweil cites Seth Lloyd's calculation that a universe-scale computer is capable of 10^90 operations per second. This would likely apply to the part of the observable universe reachable at near light speed. The mass of the universe can be estimated at 3 × 10^52 kilograms. If all matter in the universe were turned into a black hole, it would have a lifetime of 2.8 × 10^139 seconds before evaporating through Hawking radiation. Over that lifetime, such a universe-scale black hole computer would perform 2.8 × 10^229 operations.
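The last step of that paragraph is a single multiplication of rate by lifetime. As a quick check, this Python snippet (an illustration, not from the source) treats Lloyd's rate, the mass estimate, and the quoted black-hole lifetime as given inputs, multiplies rate by lifetime, and divides the rate by the mass to get an implied rate per kilogram that can be compared with the other scales in this post.

```python
# Figures quoted in the text, treated here as given inputs
OPS_PER_SECOND = 1e90            # universe-scale computer (Lloyd, via Kurzweil)
UNIVERSE_MASS_KG = 3e52          # estimated mass of the universe
BLACK_HOLE_LIFETIME_S = 2.8e139  # lifetime before Hawking evaporation

# Total work = rate x time; implied density = rate / mass
total_ops = OPS_PER_SECOND * BLACK_HOLE_LIFETIME_S
ops_per_kg = OPS_PER_SECOND / UNIVERSE_MASS_KG

print(f"Total operations over lifetime: {total_ops:.1e}")
print(f"Implied rate per kilogram: {ops_per_kg:.1e} ops/s/kg")
```

It prints 2.8 × 10^229 total operations and about 3.3 × 10^37 operations per second per kilogram.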

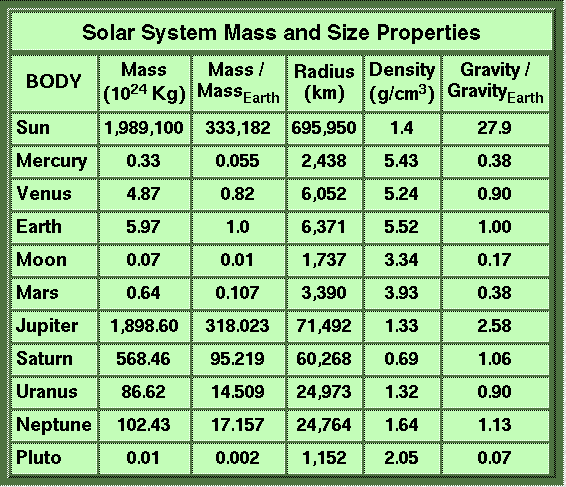

The mass of the solar system is 2 × 10^30 kilograms. A solar-system-scale computronium computer could be capable of 10^68 operations per second. This assumes taking the mass of the Sun, planets, moons, and other bodies and converting it into computronium. Global compute capacity today (2024) is about 10^24 operations per second.
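To put the solar-system figures side by side, the sketch below (an illustration, not from the source) computes how many orders of magnitude separate today's roughly 10^24 operations per second from a 10^68 operations-per-second solar system computer, and the rate per kilogram that 10^68 implies for 2 × 10^30 kilograms.

```python
import math

# Figures quoted in the text
SOLAR_SYSTEM_MASS_KG = 2e30
SOLAR_SYSTEM_OPS = 1e68      # solar-system-scale computronium, ops/s
GLOBAL_COMPUTE_2024 = 1e24   # today's global compute, ops/s

# Orders of magnitude between today and a solar system computer
gap_orders = math.log10(SOLAR_SYSTEM_OPS / GLOBAL_COMPUTE_2024)
# Implied computing density of solar-system computronium
ops_per_kg = SOLAR_SYSTEM_OPS / SOLAR_SYSTEM_MASS_KG

print(f"Gap to today's compute: {gap_orders:.0f} orders of magnitude")
print(f"Implied rate per kilogram: {ops_per_kg:.0e} ops/s/kg")
```

The gap is 44 orders of magnitude, and the implied rate is about 5 × 10^37 operations per second per kilogram, the same order of magnitude as the roughly 3 × 10^37 implied by dividing Lloyd's 10^90 by 3 × 10^52 kilograms.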

Human-made mass is about 1.2 × 10^15 kilograms: as of 2020, about 1,150 gigatons of mass had been made by humans, and one third of this was concrete.

Taking apart the planets and the Sun is non-trivial. We have only dug tiny holes, the deepest reaching about 12 kilometers.

With the ability to make nanotechnology-level computronium and self-replicating systems, converting asteroids and meteoroids could be done within a few decades of achieving molecular nanotechnology. The asteroid belt and Kuiper belt hold about 10^23 kilograms of material. Converting the asteroids and Kuiper belt objects into computronium could yield a computer capable of about 10^50 to 10^60 operations per second.

Nvidia has increased the amount of compute for AI (LLMs) by about one million times over the past decade, and it forecasts another million-fold increase over the next decade. The trillions of dollars going into AI and Large Language Models are funding the build-out of massive training and inference compute capacity.

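If humanity keeps building enough compute and energy capacity to deliver one million times more compute every decade, then in about 50 to 60 years it would need the asteroids and Kuiper belt converted into computronium to sustain the pace of AI growth. Starting from about 10^24 operations per second, six more orders of magnitude per decade gives 10^54 operations per second after 50 years and 10^60 after 60 years.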
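The 50 to 60 year estimate follows from compounding growth. The following is a minimal Python sketch assuming steady exponential growth from about 10^24 operations per second in 2024 at a million-fold per decade; `compute_in_year` and `years_until` are hypothetical helper names, and the 10^50 to 10^60 range is the asteroid and Kuiper belt estimate given earlier.

```python
import math

START_YEAR = 2024
START_OPS = 1e24                     # global compute in 2024, ops/s
GROWTH_PER_DECADE = 1e6              # assumed million-fold increase every decade
ASTEROID_KUIPER_OPS = (1e50, 1e60)   # estimated asteroid + Kuiper belt computronium, ops/s


def compute_in_year(year: float) -> float:
    """Projected global compute (ops/s), assuming steady exponential growth."""
    decades = (year - START_YEAR) / 10
    return START_OPS * GROWTH_PER_DECADE ** decades


def years_until(target_ops: float) -> float:
    """Years after START_YEAR until the projection reaches target_ops."""
    return 10 * math.log10(target_ops / START_OPS) / math.log10(GROWTH_PER_DECADE)


for target in ASTEROID_KUIPER_OPS:
    years = years_until(target)
    print(f"{target:.0e} ops/s after ~{years:.0f} years (around {START_YEAR + years:.0f})")

for years in (50, 60):
    print(f"After {years} years: {compute_in_year(START_YEAR + years):.0e} ops/s")
```

With these inputs, the low end of the range, 10^50, is crossed after roughly 43 years (around 2067), while the 10^60 upper end is reached after 60 years (around 2084).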

The other, possibly more likely, outcome is that computational progress slows at various points where scaling hits major barriers.

Molecular Computers

In January 2022, the first molecular electronics chip was developed. This achieved a 50-year-old goal of integrating single molecules into circuits to reach the ultimate scaling limits of Moore’s Law. Developed by Roswell Biotechnologies and a multi-disciplinary team of leading academic scientists, the chip uses single molecules as universal sensor elements in a circuit to create a programmable biosensor with real-time, single-molecule sensitivity and unlimited scalability in sensor pixel density.


Subscribe to next BIG future to keep reading this post and get 7 days of free access to the full post archives.